The spread of interactive conversational agents (think Siri, Alexa, Cortana) has provided ample opportunity for fools everywhere to open their hearts and souls to a familiar and seemingly disinterested tool.

And it’s all crept up on us. We’ve become so used to interacting with search engines through our smartphones, asking of them the most intimate of questions (what are the symptoms of bowel cancer? how do I get rid of my car/my boss/my boils? where can I find love?), questions we may be too shy or too ashamed to ask either our doctors or our best friends, that we didn’t notice the creep. The easy availability of a smart, talking-back front end to this service-as-usual seems both obvious and inevitable, and it is but a tiny step, a shuffle, no less, in the same direction.

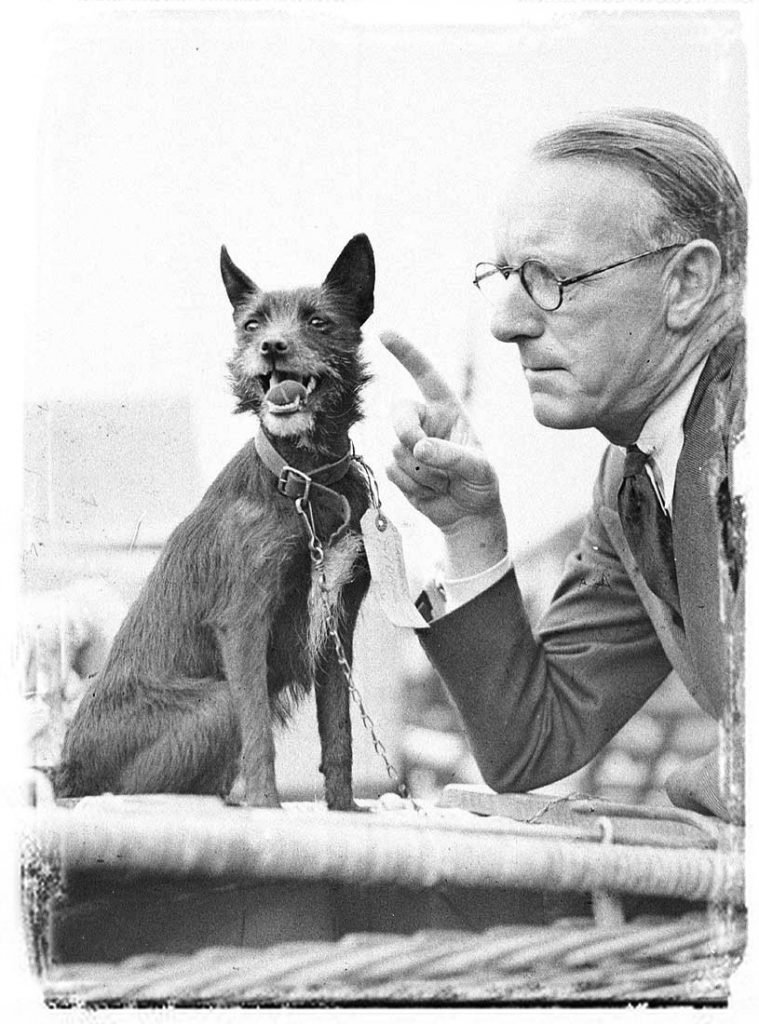

And we trust them, or at least we want to trust them, because they keep giving us more frictionless living: turn down the lights, we can say; turn up the music; why am I so lonely? Countless works of science fiction have instilled in us the notion that technology in general, and AI in particular, is rational and neutral, making it an ideal agony aunt providing personal, private, objective advice.

The spread of behaviours surrounding these services has also afforded much scope for social commentary, with pundits keen to dissect the phenomenon and ascribe a trend: the decline of morality, the ascent of depravity, the death of privacy, of humanity.

The battle for privacy is long lost, if it was ever seriously contested. A white flag hangs limply in every living room, raised the moment it became easier to ask a brushed aluminium gadget to change channel rather than seek out the TV remote, orient it, find the right button . . . The new battle is ideological, and the field of battle is our emotions.

A recent article in Aeon magazine raises some interesting issues about the quantification of emotions, or more specifically the algorithmic response to human feelings. The authors make much of the difference between a Russian conversational agent called Alisa, who delivers a more earthy, “tough love” response, and the saccharine pap of western bots like Alexa and Google Assistant. Digging into something they term “emotional regimes”, they maintain that both are reflections of the societies from which they spring and, ultimately, of the way the technology works (which is a kind of synthesis of responses formed by munching through buckets of sample data).

So the deeper impact lies in the cultural assumptions in the data sets and our vulnerability to being “nudged”. “Nudging” is a term which puts a friendly gloss on the practice of behavioural change beloved of government, marketers and tech startups. Not pushing or forcing: nudging. Small, incremental changes that don’t hurt. It’s gentle, almost avuncular, and it implies a social benefit. Why fix the fundamental problems when you can fix people’s experience of them and make them see it was really their problem all along?